Securing your website with an SSL certificate protects sensitive data in transit and helps establish trust with your users. Whether you’re running a web server on a cloud-based virtual private server (VPS) or on a local machine behind a network address translation (NAT) gateway, the machine has to be configured correctly to obtain, serve, and renew its certificate. In this guide, we’ll walk you through the steps involved in setting up your machine to handle SSL certificates.

Before going further, you should make sure the following is already done:

- Update DNS records:

  - Log in to your domain registrar’s control panel or the DNS management interface provided by your DNS hosting provider.

  - Create an A record for your domain (yourdomain.com) and point it to the public IP address of the server where your website is hosted.

  - Create another A record for the www subdomain (www.yourdomain.com) and point it to the same IP address.

  - If your server has a static IP address, you can enter it directly in the A records.

  - If your server has a dynamic IP address, you may need a dynamic DNS service to update the records automatically whenever the address changes.

- Wait for DNS propagation:

  - After updating the DNS records, it may take some time for the changes to propagate across the internet.

  - Propagation can take anywhere from a few minutes to a few hours, depending mainly on the TTL of the previous records and on how long resolvers cache them.

  - You can use online tools like “What’s My DNS” or “DNS Checker” to verify that your DNS records have propagated correctly.
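If you prefer the command line to the online checkers, the following sketch compares what a resolver returns with the address you expect. It assumes `dig` is installed; the function names and the address 203.0.113.10 are placeholders chosen for illustration.

```shell
# Succeeds if the expected address appears in a newline-separated list of answers.
ip_in_answers() {
  expected=$1
  answers=$2
  printf '%s\n' "$answers" | grep -Fxq -- "$expected"
}

# Resolve the A records for a host and compare them with the expected address.
check_dns() {
  host=$1
  expected=$2
  answers=$(dig +short "$host" A)
  if ip_in_answers "$expected" "$answers"; then
    echo "OK: $host resolves to $expected"
  else
    echo "MISMATCH: $host resolved to: ${answers:-nothing}"
    return 1
  fi
}

# Usage (placeholder values):
#   check_dns yourdomain.com 203.0.113.10
#   check_dns www.yourdomain.com 203.0.113.10
```

A mismatch shortly after a change usually just means the old record is still cached, so it is worth retrying later before changing anything else.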

Understanding the Difference: Cloud VPS vs. NAT Network

Before we dive into the setup process, it’s important to understand the distinction between a cloud-based VPS and a network that utilizes NAT.

Cloud VPS (e.g., Amazon EC2):

- A cloud VPS, such as Amazon Elastic Compute Cloud (EC2), provides a virtualized server environment that is directly accessible from the internet.

- With a cloud VPS, you typically have a public IP address assigned to your instance, eliminating the need for port forwarding.

- The cloud provider manages the underlying network infrastructure, simplifying the setup process.

NAT Network:

- In a NAT network, your web server is located behind a router or firewall that translates between private and public IP addresses.

- To make your web server accessible from the internet, you need to configure port forwarding on your router to direct incoming traffic to the appropriate internal IP address and port.

- NAT networks are commonly found in home or small office settings where a single public IP address is shared among multiple devices.
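One rough way to tell which situation you are in is to look at the addresses assigned to the machine itself. The sketch below classifies IPv4 addresses against the RFC 1918 private ranges; the function names are illustrative and IPv6 is not handled. Keep in mind that many cloud instances, EC2 included, also show a private address on their interface while the provider maps the public address to it, so a private address tells you that translation is happening somewhere, not necessarily that you have to configure port forwarding yourself.

```shell
# Succeeds if the IPv4 address falls in an RFC 1918 private range
# (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).
is_private_ipv4() {
  case $1 in
    10.*|192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}

# Print each address followed by its classification.
classify_addresses() {
  for addr in "$@"; do
    if is_private_ipv4 "$addr"; then
      echo "$addr private"
    else
      echo "$addr public"
    fi
  done
}

# Usage on Linux, where `hostname -I` lists the machine's addresses
# (any IPv6 entries in that list should be ignored):
#   classify_addresses $(hostname -I)
```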

Step 1: Preparing Your Web Server

To provide a hands-on experience, let’s walk through the process of setting up a fictitious WordPress installation on an Apache web server and configuring SSL using Let’s Encrypt. We’ll focus on the Apache, Certbot, and SSL configuration steps.

Install Apache Web Server:

- Open a terminal or SSH into your server.

- Update the package manager:

sudo apt update

- Install Apache:

sudo apt install apache2

- Verify that Apache is running by accessing your server’s IP address or domain name in a web browser. You should see the default Apache page.
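On a server without a desktop browser, the same check can be done from the terminal. This is a minimal sketch that assumes `curl` is installed and that Apache is listening on the default port 80; the function names are made up for this example.

```shell
# Fetch only the HTTP status code for a URL (curl prints 000 if it cannot connect).
http_status() {
  curl -s -o /dev/null --max-time 10 -w '%{http_code}' "$1"
}

# Report whether the local Apache answers with a successful status.
check_apache_local() {
  status=$(http_status "http://localhost/")
  if [ "$status" = "200" ]; then
    echo "Apache is serving pages (HTTP $status)"
  else
    echo "Unexpected response from Apache (HTTP ${status:-none})"
    return 1
  fi
}

# Usage:
#   systemctl is-active apache2   # prints "active" when the service is running
#   check_apache_local
```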

Install Certbot and Obtain an SSL Certificate:

- Install Certbot, the Let’s Encrypt client, and the Apache plugin:

sudo apt install certbot python3-certbot-apache

- Obtain an SSL certificate using Certbot:

sudo certbot --apache -d yourdomain.com -d www.yourdomain.com

Replace yourdomain.com with your actual domain name.

- Follow the prompts to provide an email address and agree to the Let’s Encrypt terms of service.

- Choose whether to redirect HTTP traffic to HTTPS (recommended for better security).

- Certbot will automatically configure Apache with the obtained SSL certificate.
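Once Certbot finishes, you may want to confirm what was actually issued. The sketch below uses `openssl` to print a certificate file's details and to check how much validity it has left. The function names are illustrative, and the functions need to run from a root shell because the /etc/letsencrypt/live directory is normally readable only by root.

```shell
# Print the subject, issuer, and validity dates of a PEM certificate file.
show_cert() {
  openssl x509 -in "$1" -noout -subject -issuer -dates
}

# Succeeds if the certificate is still valid for at least the given number of days.
cert_valid_for_days() {
  cert_file=$1
  days=$2
  openssl x509 -in "$cert_file" -noout -checkend "$((days * 86400))" >/dev/null
}

# Usage (as root, with your own domain in the path):
#   certbot certificates
#   show_cert /etc/letsencrypt/live/yourdomain.com/fullchain.pem
#   cert_valid_for_days /etc/letsencrypt/live/yourdomain.com/fullchain.pem 30 \
#     || echo "Certificate expires within 30 days"
```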

Configure SSL in Apache:

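- Certbot’s Apache plugin normally writes the certificate directives for you, into a virtual host file that it generates in /etc/apache2/sites-available/ (typically named with a -le-ssl.conf suffix). If you maintain the default SSL virtual host yourself, open its configuration file instead:

sudo nano /etc/apache2/sites-available/default-ssl.conf

- Verify that the following lines are present and uncommented: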

SSLCertificateFile /etc/letsencrypt/live/yourdomain.com/fullchain.pem

SSLCertificateKeyFile /etc/letsencrypt/live/yourdomain.com/privkey.pem

Replace yourdomain.com with your actual domain name.

- Save the file and exit the text editor.

Enable SSL in Apache:

- Enable the Apache SSL module:

sudo a2enmod ssl

- Enable the default SSL virtual host:

sudo a2ensite default-ssl

- Restart Apache to apply the changes:

sudo systemctl restart apache2
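A restart with a broken configuration takes the site offline, so it can help to run Apache's syntax check first. The wrapper below is one possible way to combine the two steps; `restart_if_valid` is a name invented for this sketch, while `apache2ctl configtest` is the standard syntax check on Debian and Ubuntu.

```shell
# Restart Apache only when the configuration passes a syntax check.
restart_if_valid() {
  if sudo apache2ctl configtest; then
    sudo systemctl restart apache2
  else
    echo "Configuration check failed; Apache was not restarted" >&2
    return 1
  fi
}

# Usage:
#   restart_if_valid
```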

Verify SSL Configuration:

- Open a web browser and access your WordPress site using https:// (e.g., https://yourdomain.com).

- Verify that the website loads securely with a valid SSL certificate. You should see a padlock icon in the browser’s address bar.

- Click on the padlock icon to view the SSL certificate details and ensure that it is issued by Let’s Encrypt and valid for your domain.
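If you chose the redirect option during the Certbot prompts, you can also confirm from the terminal that plain HTTP requests are sent on to HTTPS. This sketch assumes `curl` is available; the function names are illustrative.

```shell
# Reads HTTP response headers on stdin; succeeds if the Location header
# points to an https:// URL.
location_is_https() {
  tr -d '\r' | grep -qi '^location: *https://'
}

# Request only the headers over plain HTTP and check where they redirect.
check_redirect() {
  curl -sI --max-time 10 "http://$1/" | location_is_https
}

# Usage:
#   check_redirect yourdomain.com && echo "HTTP redirects to HTTPS"
```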

Additional Configuration:

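- To ensure that your SSL certificate remains valid, set up automatic renewal using Certbot. The certbot package on Debian and Ubuntu typically installs a systemd timer or cron entry for this already; otherwise you can create a cron job or systemd timer that runs the renewal command regularly. You can confirm that renewal works by running sudo certbot renew --dry-run.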

- Consider implementing HTTP Strict Transport Security (HSTS) to enforce HTTPS connections and improve security.

- Regularly monitor your SSL configuration and keep your web server software up to date to address any potential vulnerabilities.
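For the HSTS suggestion, one possible approach on Debian and Ubuntu is a small Apache configuration snippet that sets the header through mod_headers. The helper below writes such a snippet; the function name, the file name hsts.conf, and the default max-age of one year are assumptions made for this sketch. While testing, consider passing a short max-age first, because browsers remember the policy for that long.

```shell
# Write an Apache config snippet that adds the HSTS header to every response.
# $1: target file (default: /etc/apache2/conf-available/hsts.conf)
# $2: max-age in seconds (default: 31536000, one year)
write_hsts_conf() {
  target=${1:-/etc/apache2/conf-available/hsts.conf}
  max_age=${2:-31536000}
  cat > "$target" <<EOF
<IfModule mod_headers.c>
    Header always set Strict-Transport-Security "max-age=${max_age}"
</IfModule>
EOF
}

# Usage (as root):
#   a2enmod headers
#   write_hsts_conf /etc/apache2/conf-available/hsts.conf 300
#   a2enconf hsts
#   apache2ctl configtest && systemctl reload apache2
```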

Note: Remember to replace yourdomain.com with your actual domain name throughout the configuration process.

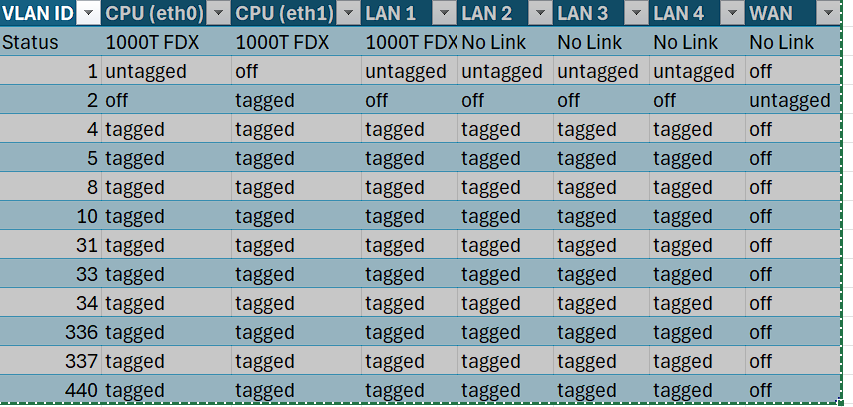

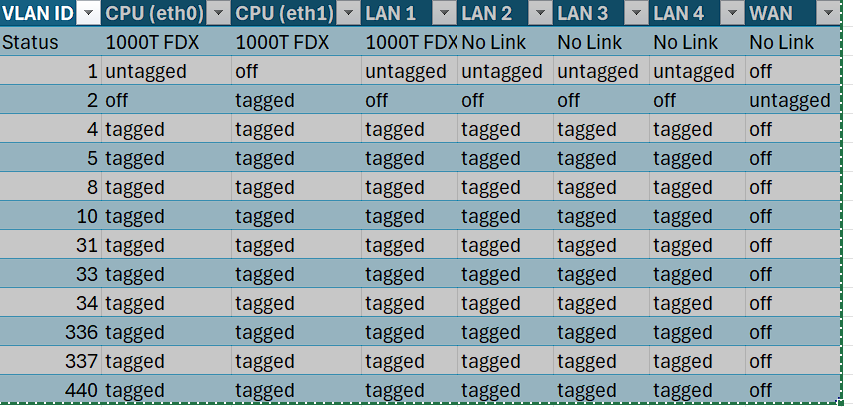

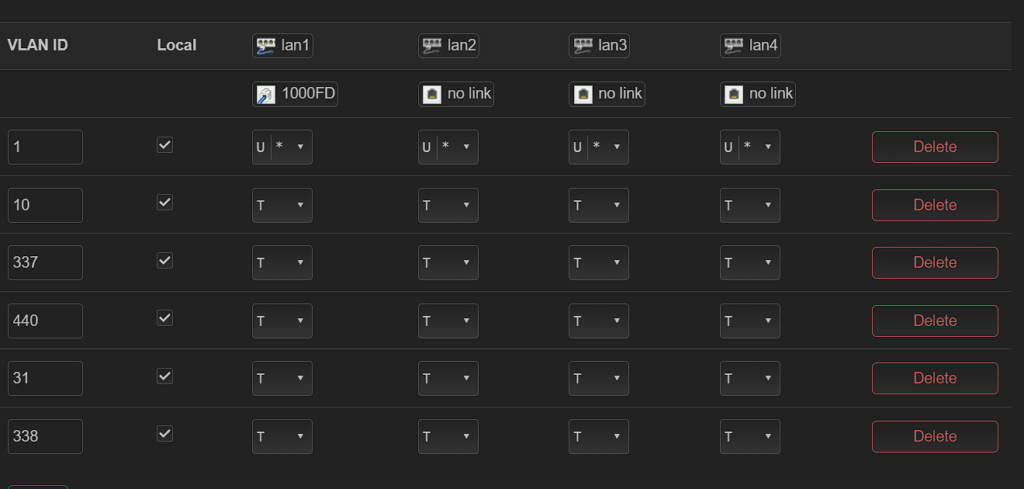

Step 2: Network Configuration

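The network configuration steps vary depending on whether you’re using a cloud VPS or a NAT network. In either case, the ports need to be reachable from the internet before you run Certbot in Step 1, because Let’s Encrypt has to connect to your server to validate the domain.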

Cloud VPS (e.g., Amazon EC2):

- No additional network configuration is required since your VPS has a public IP address.

- Ensure that the necessary ports (port 443 for HTTPS, and port 80 for HTTP, which Let’s Encrypt uses for validation) are open in your VPS’s security group or firewall settings.
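If the VPS runs a host firewall, the ports have to be opened there as well as in the provider's security group. The sketch below assumes the firewall is `ufw`, which is common on Ubuntu but not guaranteed to be what your server uses; `open_web_ports` is an illustrative name. Security groups on EC2 are managed separately, through the AWS console or CLI.

```shell
# Allow inbound HTTP (80) and HTTPS (443) through ufw.
open_web_ports() {
  for port in 80 443; do
    sudo ufw allow "${port}/tcp" || return 1
  done
}

# Usage:
#   open_web_ports
#   sudo ufw status
```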

NAT Network:

- Access your router’s administrative interface, typically through a web browser.

- Locate the port forwarding or virtual server settings.

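- Create port forwarding rules for both web ports, specifying the following:

  - External ports: 443 (HTTPS) and 80 (HTTP). Port 80 is needed because Let’s Encrypt’s HTTP-based domain validation (the HTTP-01 challenge, which certbot --apache uses) connects to your server over plain HTTP. DNS-based validation (DNS-01) needs no inbound port.

  - Internal IP address: The private IP address of your web server

  - Internal port: The port on which your web server is listening (443 for HTTPS, 80 for HTTP)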

- Save the port forwarding rule and restart your router if necessary.
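The forwarding rule needs the web server's private address. On Linux, one way to find it is to ask the kernel which source address it would use for outbound traffic. This is a sketch with illustrative function names; 1.1.1.1 is used only as an arbitrary public destination to select the default route, and no packets are sent to it.

```shell
# Extract the source address from `ip route get` output read on stdin.
parse_src_addr() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "src") { print $(i + 1); exit } }'
}

# Print the address this machine uses for outbound IPv4 traffic.
internal_ip() {
  ip -4 route get 1.1.1.1 | parse_src_addr
}

# Usage:
#   internal_ip
```

Because the rule points at this address, it is worth reserving it for the server in your router's DHCP settings so that it does not change after a reboot.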

Step 3: Testing and Verification

Once you have completed the web server and network configuration, it’s time to test and verify that your SSL certificate is properly set up.

Access Your Website via HTTPS:

- Open a web browser and enter your website’s URL preceded by https:// (e.g., https://www.example.com).

- Verify that the website loads securely without any SSL-related errors or warnings.

Check Certificate Details:

- Click on the padlock icon in the browser’s address bar to view the SSL certificate details.

- Ensure that the certificate is issued to your domain name and is valid and trusted.
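The same details can be read without a browser. The sketch below asks the server for its certificate with `openssl s_client` and prints the subject, issuer, and validity dates; the function names are illustrative, and the issuer check simply looks for the text "Let's Encrypt" in the issuer line.

```shell
# Print subject, issuer, and validity dates of the certificate a server presents.
remote_cert_info() {
  host=$1
  port=${2:-443}
  openssl s_client -connect "${host}:${port}" -servername "$host" </dev/null 2>/dev/null \
    | openssl x509 -noout -subject -issuer -dates
}

# Reads that output on stdin; succeeds if the issuer line names Let's Encrypt.
issued_by_lets_encrypt() {
  grep -i '^issuer' | grep -q "Let's Encrypt"
}

# Usage:
#   remote_cert_info yourdomain.com
#   remote_cert_info yourdomain.com | issued_by_lets_encrypt && echo "Issued by Let's Encrypt"
```

Running this from a machine outside your own network also confirms that port 443 is reachable from the internet.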

Perform SSL Server Test:

- Use online tools like SSL Labs (https://www.ssllabs.com/ssltest/) to perform a comprehensive SSL server test.

- The test will evaluate your SSL configuration and provide a detailed report highlighting any potential issues or vulnerabilities.

Conclusion

Setting up a machine to accept and verify SSL certificates is a critical step in securing your website and protecting your users’ data. By following the steps outlined in this guide, you can configure your web server and network to handle SSL certificates effectively, regardless of whether you’re using a cloud VPS or a NAT network. Remember to regularly monitor and update your SSL configuration to ensure ongoing security and compliance with industry standards.

Additional Resources:

- Let’s Encrypt: https://letsencrypt.org/

- Apache SSL/TLS Encryption: https://httpd.apache.org/docs/current/ssl/

- Nginx SSL Termination: https://docs.nginx.com/nginx/admin-guide/security-controls/terminating-ssl-http/

- Amazon EC2: https://aws.amazon.com/ec2/

- SSL Labs Server Test: https://www.ssllabs.com/ssltest/

By taking the time to properly set up your machine for SSL certificate verification, you can enhance the security and trustworthiness of your website, providing a safe and secure experience for your users.